As these devices are pressed to communicate with one another, our expensive server processors will become costly traffic lights bottlenecking enormous data flows. Today, we have persistent memory sitting in DIMM sockets, NVMe storage, and smart storage (SmartSSD) plugged directly on the PCIe bus, along with a variety of accelerator cards and SmartNICs or DPUs, some with vast memories of their own. With the kernel change, it’s now relatively straightforward for an accelerator to talk with PCIe/NVMe memory on the PCIe bus or another accelerator.Īs solutions become more complex, simple P2P will not be enough, and it will constrain solution performance.

While P2P existed before this kernel update, it required some serious magic to work, often requiring that you had programmatic control over both peer devices. This made it easier for one device on the PCIe bus to share data with another.

#CXL CACHE COHERENCE CODE#

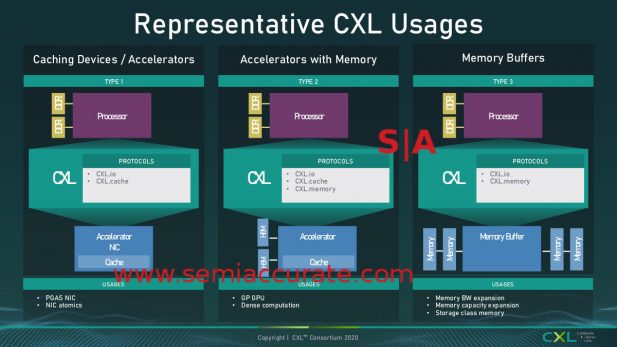

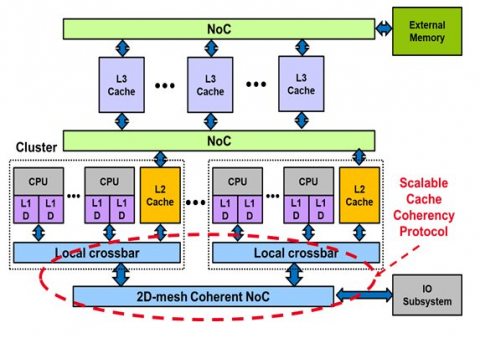

In 2018, the Linux kernel finally rolled in code to support PCIe peer-to-peer (P2P) mode. CXL goes a long way toward improving host communications with accelerators, but it doesn't address accelerator-to-accelerator communications on the PCIe bus. With CXL, these high-performance memories can now be shared with the host CPU, making it easier to operate on datasets in shared memory.įurthermore, for atomic transactions, CXL can share cache memory between the host CPU and the accelerator card. Some of these accelerators have sizable high-performance local memories using DRAM or high-bandwidth memory (HBM). This enables the host CPU to efficiently dispatch work to the accelerator and receive the product of that work. It provides a well-defined master-slave model where the CPU's root complex can share both cache and main system memory over a high-bandwidth link with an accelerator card (Fig. However, it also comes with an Aladdin's Lamp filled with promise in the form of two new protocols, Compute Express Link (CXL) and a Cache Coherent Interconnect for Accelerators (CCIX) to create efficient communication between CPUs and accelerators like SmartNIC or co-processors. If that's all the fifth generation of PCIe brought, that would be fine. Today, a fourth-generation PCIe x8 slot is suitable for about 16 GB/s, so the next generation will be roughly 32 GB/sec. Like me, most people, OK nerdy architects, know that with every PCIe generation, the speed has doubled. To save a buck, some server vendors even used x8 connectors, but only wired them for x4-boy, that was fun.

Back then, 16-lane (x16) slots were unheard of, and server motherboards often had only a few x8 slots and several x4 slots. The first-generation PCIe x8 bus was 2 GB in each direction. They could move nearly 1.25 GB/s in each direction, so the eight-lane (x8) PCIe bus couldn't have been timelier. Shortly after that, well-performing 10-GbE NICs (network interface controllers) emerged onto the scene. At this point, high-performance computing (HPC) networks like Myrinet and Infiniband had just pushed beyond GbE with data rates of 2 Gb/s and 8 Gb/s, respectively. Peripheral Component Interconnect Express, or PCIe, first appeared in 2003 and, coincidentally, arrived just as networking was getting ready to start the jump from Gigabit Ethernet (GbE) as the primary interconnect. Under the covers, the roadway has been PCI Express (PCIe), which has undergone significant changes but is mainly taken for granted. From trading stocks to genomic sequencing, computing is about getting answers faster. They boast huge FPGAs, multicore Arm clusters, or even a blend of both, with each of these advances bringing significant gains in solution performance.

As we turned another decade, GPUs went far beyond graphics, and we witnessed the emergence of field-programmable gate-array (FPGA)-based accelerator cards.Īs we're well into 2020, SmartNICs (network interface cards), also known as DPUs (data processing units), are coming into fashion. Then with the millennium, we first saw dual-socket and later multicore processors. In the 1990s, we went from single-socket standalone servers to clusters. We've jumped many chasms over the past three decades of server-based computing. This article is part of the Communication and System Design Series: Have SmartNIC - Will Compute
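As a rough check on the bandwidth figures quoted in this article (2 GB/s for a first-generation x8 slot, about 16 GB/s for fourth generation, roughly 32 GB/s for fifth, and 1.25 GB/s for 10 GbE), here is a minimal Python sketch. It assumes the nominal per-lane signaling rates and line encodings for each PCIe generation and ignores packet and protocol overhead, so real throughput will be somewhat lower. The function and table names are illustrative only.

```python
# Nominal per-lane signaling rate (GT/s) and line-encoding efficiency
# for each PCIe generation. Gen 1-2 use 8b/10b; Gen 3-5 use 128b/130b.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_bandwidth_gbytes(generation: int, lanes: int) -> float:
    """Approximate per-direction bandwidth in GB/s (encoding overhead only)."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return gt_per_s * efficiency * lanes / 8


def ethernet_gbytes(gbits_per_s: float) -> float:
    """Convert an Ethernet line rate in Gb/s to GB/s."""
    return gbits_per_s / 8


if __name__ == "__main__":
    for gen in sorted(PCIE_GENERATIONS):
        print(f"PCIe Gen{gen} x8: {pcie_bandwidth_gbytes(gen, 8):.2f} GB/s per direction")
    print(f"10 GbE: {ethernet_gbytes(10):.2f} GB/s per direction")
```

Under these assumptions, a first-generation x8 slot works out to exactly 2 GB/s, comfortably above the 1.25 GB/s a 10-GbE NIC can move. The fourth- and fifth-generation x8 figures land just under 16 and 32 GB/s.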
